Phishing continues to be a hot-button issue and a prevalent worry for many, so let's keep this conversation alive. In a recent installment of Tradecraft Tuesday, our very own Chris Henderson, Huntress Sr. Director of Threat Operations, sat down with teammate and Huntress Sr. Product Researcher Truman Kain to discuss the ever-present and always-evolving threat that is phishing. As a quick recap, phishing is a social engineering tactic in which threat actors try to trick you into divulging sensitive information or performing actions that could put you or your organization at serious risk.

In this installment of Tradecraft Tuesday, topics include current events in phishing as well as in-depth demos around phishing, smishing (“SMS phishing”), and even vishing (“voice phishing”) using AI. So settle in: this in-depth tradecraft coverage is sure to provide new insights that bring an attacker-level perspective to your defenses.

You can find the full episode (plus transcripts for our copious note-taker crowd!) here. Now, let’s recap the episode with a “highlight reel” of all things phishing.

Phishing in Current Events Gets Even Fishier

Together, Henderson and Kain cover recent, costly phishing incidents like a $25M heist that used deepfaked video and cloned voices of a victim’s own coworkers to create what seemed like legitimate, real-time video and audio. The result of this sketchy effort? The finance worker in question completed a wire transfer requested by deepfakes that successfully impersonated the victim’s coworkers, including an executive. In a word: unreal.

Headline from CNN

But in another word: reality. Events like these show that phishing and vishing are evolving to exploit how we’re all used to communicating, particularly at work and around sensitive actions like transferring funds. They highlight the need for additional measures, like requiring authorization through a portal with multi-factor authentication (MFA) enabled, so that a request that seems completely legitimate in the face of an uncanny deepfake can’t be completed on appearance alone.
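To make that idea concrete, here is a minimal sketch of what such a gate could look like in code. It is not a description of any real payment system: the `TransferRequest` fields and the `may_execute` function are hypothetical names, and the "second person must approve" rule is our own assumption layered on top of the MFA requirement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class TransferRequest:
    """Hypothetical record of a wire transfer request (illustrative only)."""
    requester: str                     # who asked for the transfer, e.g. on a call
    amount_usd: float
    approver: Optional[str] = None     # who approved it in the portal, if anyone
    approved_in_portal: bool = False   # approval recorded in the portal, not on a call
    approver_passed_mfa: bool = False  # approver completed MFA for this approval


def may_execute(req: TransferRequest) -> bool:
    """Allow the transfer only if it was approved out of band, behind MFA."""
    # A convincing voice or video call is never sufficient on its own.
    if not req.approved_in_portal or not req.approver_passed_mfa:
        return False
    # Assumption: a second person must approve; requesters cannot self-approve.
    if req.approver is None or req.approver == req.requester:
        return False
    return True
```

The point of the sketch is that nothing seen or heard on a call feeds into the decision. Only the portal approval and the MFA check do, which is exactly what a deepfake cannot supply.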

In another instance, an advanced persistent threat (APT) group recently used weaponized PDFs to abuse Windows protocols and execute malware on victims’ machines. This example highlights how easily adversaries can capitalize on a victim merely clicking a link, regardless of whether they enter any credentials at all. By using the normal behavior of legitimate software as a vessel, attackers can carry out attacks with very little user participation.

AI Adds a New Layer to Phishing and Vishing Complexity

Security budgets are tightening up—and that coincides with a consistently lower “barrier to entry” for threat actors who want to leverage phishing or vishing to target organizations. And for organizations using basic education and precautionary training measures, staying ahead of social engineering attacks is becoming harder.

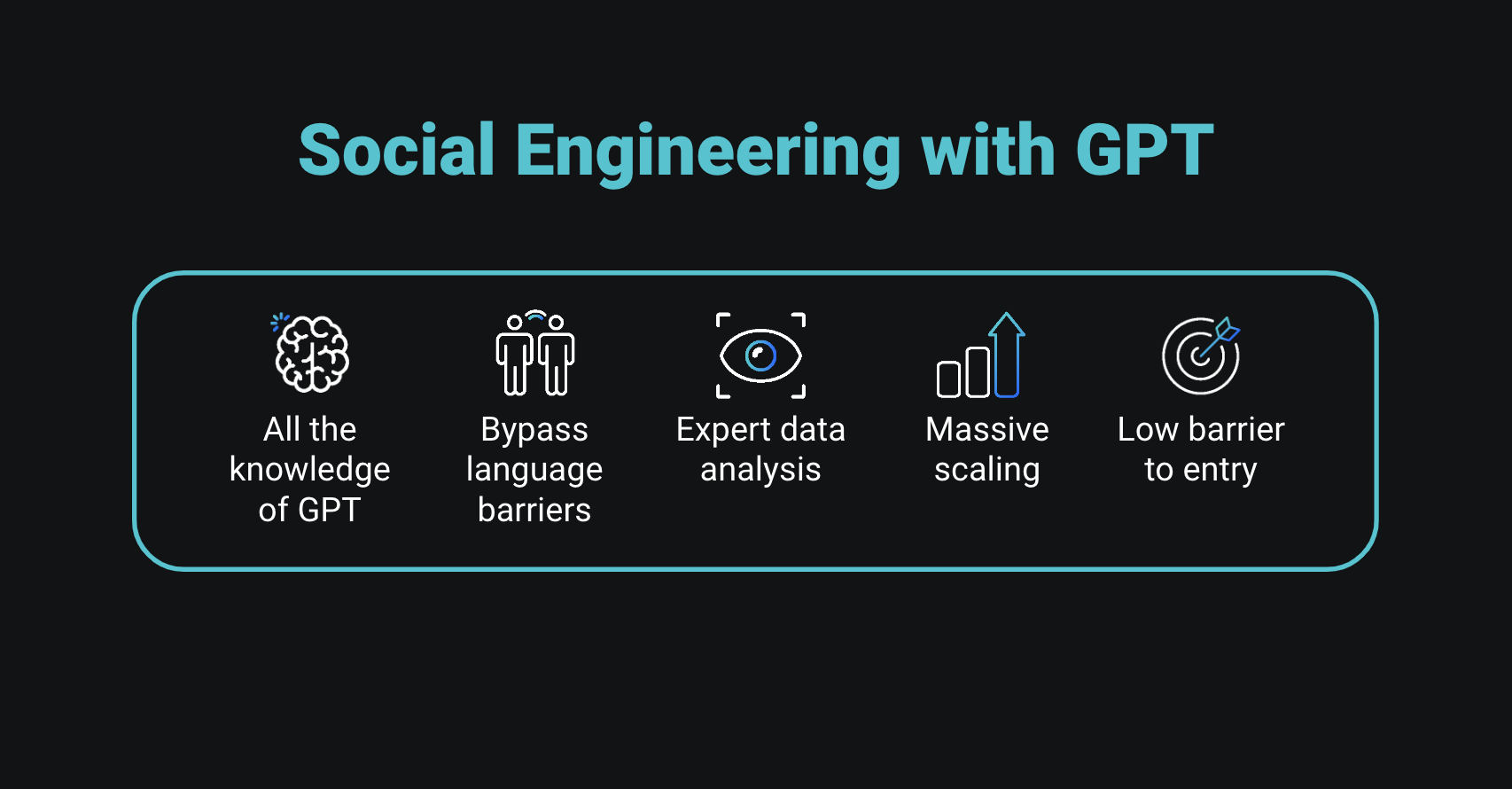

Through quick-fire demos, this episode paints a picture of how phishing has moved into the fast lane. And with large language models (LLMs), social engineering is becoming even easier because:

- Attackers get all the knowledge of the AI model

- Threat actors can easily bypass language barriers, and translations become instant

- Attackers can take advantage of expert data analysis

- This approach allows adversaries to easily scale up their efforts

Attackers can now leverage AI to fast-track social engineering attacks across phishing, vishing, and smishing—with very little knowledge or preparation before successfully executing these attacks. All they need to do is access an LLM.

Social engineering with GPT

ChatGPT and other AI tools now empower threat actors with a dynamic level of flexibility, allowing them to adapt phishing, smishing, and vishing well beyond previous limitations. Today’s attacks can include long-running conversations over SMS that—again—feel very legitimate and above board to the target victim. Combined with new features like voice cloning and emotion detection, these efforts will start looking more and more life-like and undetectable to targets.

This episode demonstrates our own exercise, including the prompts used to create a GPT-powered phishing campaign, to show how easy this is.

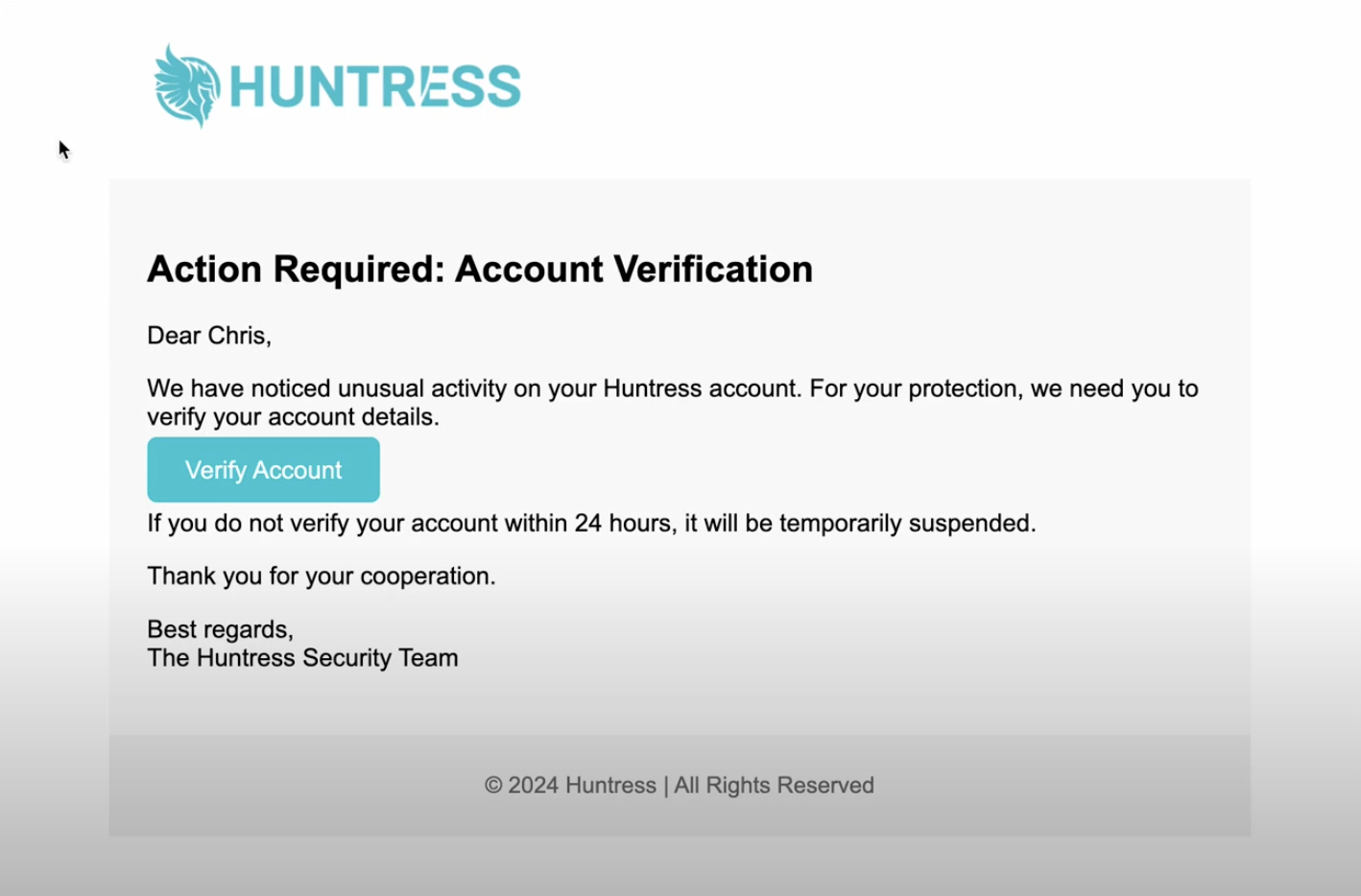

An example of a phishing email. Notice the urgent language and uneven spacing among the text and the "Verify Account" button.

Casting a Wide Net with ChatGPT

With a basic prompt including names, job titles, companies, and vendors used by Huntress, our own Truman Kain showed just how easily attackers can leverage an LLM to create a convincing phish quickly. While a trained eye could spot minor departures from in-house style and branding (like spacing in the sentences pictured above), the result closely resembles a legitimate Huntress communication.

And it was all produced using a basic ChatGPT prompt in about five minutes.

Across phishing, smishing, and vishing, AI produced a universal result: it was hard for Huntress’ own experts to spot differences between GPT-created attacks and legitimate business communication.

Particularly interesting: the LLM could improvise and add compelling elements to these types of communications that the prompt never asked for. These elements ranged from emphasis on the urgency of a matter that a target needed to resolve (a past-due account, for example) to especially emotive speech captured in an AI-enhanced vishing attempt. These intuitive capabilities in AI are helping attackers cast a wider net than ever before.

Vishing Gets a Boost from AI

In a short vishing demo using AI, Kain reviewed an exercise using inexpensive programs like ElevenLabs to adapt known voices—like that of Huntress CEO and co-founder Kyle Hanslovan. Using sliding controls, Kain was able to fine-tune a short clip to create a frighteningly close impersonation.

Today’s voice cloning allows attackers to:

- Create a virtually indistinguishable impersonation

- Use a clip as brief as 15 seconds in length

- Go around real-time limitations to leave voicemails that sound truly legit

- Access multilingual capabilities to get around language barriers

With just 2MB of audio (about 70 seconds), our team crafted a convincing copycat voice within minutes. The result is equally impressive and disturbing, and it’s easy to see how a highly motivated threat actor could chase a large payday using these intuitive and convincing tools.

The Redux Version: Nothing is Sacred

Even landing pages aren’t safe. With tools like SingleFile, attackers can now convert these pages into cloned versions that make the perfect addition to the “AI-powered” tackle box. By stripping away unnecessary scripts and converting remote images and resources, these cloning tools hand the attacker a download containing the cloned website of their choice.

All they need to do is figure out how to get you to bite. From using landing page delay to leveraging MFA capture with programs like Evilginx, this lightning round of demos serves as a cautionary tale for organizations navigating reduced cybersecurity budgets in a world chock-full of evolving attack methods.
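Because a cloned or proxied login page can look pixel-perfect, the hostname is often the only thing that gives it away. As a rough defensive sketch (not something shown in the episode), the check below only trusts a login link when its host exactly matches an allowlist. The `ALLOWED_LOGIN_HOSTS` set, the `login.example.com` host, and the `is_trusted_login_url` function are hypothetical placeholders.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; a real one would hold your organization's login hosts.
ALLOWED_LOGIN_HOSTS = {"login.example.com"}


def is_trusted_login_url(url: str) -> bool:
    """Trust a login link only if it is HTTPS and its host matches exactly."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # urlsplit lowercases the hostname; also drop a trailing root dot.
    host = (parts.hostname or "").rstrip(".")
    # Exact match only: lookalike or proxy hosts that merely contain the
    # real name (as a prefix, subdomain label, or userinfo) are rejected.
    return host in ALLOWED_LOGIN_HOSTS
```

For example, `https://login.example.com.evil.test/signin` and `https://login.example.com@evil.test/` both fail the check, because the host the browser would actually contact is not on the list, however familiar the link looks at a glance.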

And, with LLMs helping even below-average attackers sharpen their arsenal of tools and tactics, the tide will continue to turn to favor threat actors—and imperil small enterprises and MSPs.

To watch this recording or other Tradecraft Tuesday episodes, check out our on-demand page and register for the next episode so you don’t miss a second of this valuable insight.