If you’ve ever been on a cruise, you might recall looking at the ship's list of daily activities and becoming overwhelmed. You probably found everything from Star Wars trivia to competitive ballroom dancing to late-night movies. With all that was available, you were likely left wishing for an easier way to filter your options into a more manageable list of the most relevant events.

Security information and event management (SIEM) may not be a cruise (though sometimes we wish it were), but it does have to manage the long list of log data it ingests. Much like our activities scenario, it must sort through that volume and alert only on the events that matter most. If it sounds daunting, that’s because it is. Fortunately, there’s a way to make SIEM smarter at this task.

If you search online for “log-to-alert ratio,” you’ll quickly find funnels showing the flow of logs from raw data to parsed logs to security events to incidents and, finally, to alerts. While these can be impressive, we’re all left with one question: why do we start with so much data in the first place?

The log-to-alert funnel is needlessly complex. Typically, terabytes of raw data flood in, and it all gets filtered down into a handful of alerts. Instead of sorting through all that noise, wouldn’t it make more sense to start with a cleaner, more condensed version of raw data already tuned to actionable insights?

Let's explore some ways we end up bulking up our ingested log data without even knowing it.

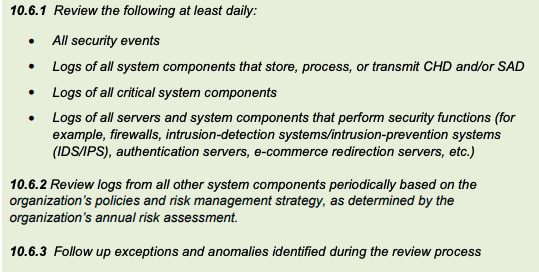

Let's start with one of the most common causes: compliance. A quick search on Payment Card Industry Data Security Standard (PCI DSS) compliance reveals the following, taken from the PCI Security Standards.

While this may seem straightforward enough, a closer look shows that not all is as it seems. According to section 2.1.1 of the PCI Security Standards:

The PCI DSS Glossary defines a security event as “an occurrence considered by an organization to have potential security implications to a system or its environment. In the context of PCI DSS, security events identify suspicious or anomalous activity.” Examples of security events include attempted logons by nonexistent user accounts, excessive password authentication failures, or the startup or shutdown of sensitive system processes. Unfortunately, determining the specific types of events and activities that should constitute security events is largely dependent on each individual environment, the systems resident in that environment, and the business processes served by that environment. Therefore, each organization must define for itself those system events and activities that represent “security events.”

We immediately realize the underlying problem: specific examples of "security events" are hard to define. One of the most common questions during SIEM product demos is, “Can you tell or show me what I should log for compliance?” All too often, the answer is “everything.” However, looking at section 10.6.1, the consistent guidance is to collect from specific components, plus anything else you deem a security event.

While collecting everything can seem like an ideal solution, compliance mandates don’t require this. Plus, the law of diminishing returns determines how much of the data will be useful during a possible security event. Except for a very small subset of compliance regulations, storage of all data across the board isn't required.

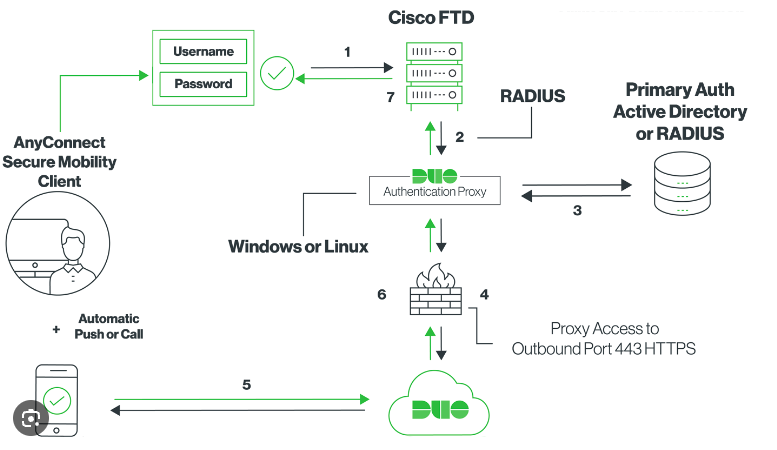

Debug logs can ultimately bug you. This is another overly collected type of log data. While some of the data can be valid, there’s often log bloat that may be hidden at first glance. Let’s take a VPN connection for example. When troubleshooting potential network issues with a VPN, multiple steps occur as your computer connects to the VPN server, routes out to your requested destination, and back toward you. Duo Security lays it out pretty clearly, as you can see below.

Diving into this picture, there are two areas that would clearly be classified as security events:

Outside of those two events, while the rest of the debug data might be interesting, the security value quickly diminishes. That’s not to say that your friendly neighborhood networking team wouldn’t find it valuable. But from a security practitioner’s perspective, the core of the investigation revolves around those two log points. Much like our compliance example, we could store the full depth of the debug data in SIEM, but from a security perspective, filtering out the unnecessary log data would help us identify problems more quickly.

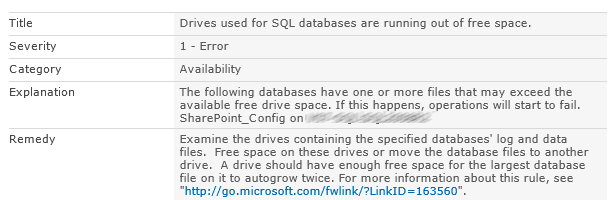

When your endpoint or server flags that its disk is full, lets you know what services are available, or informs you of the overall performance of your device, that’s IT Ops logs doing their thing. They’re useful for day-to-day operations, whether you’re swapping your SSD for a sweet new heftier one or just monitoring the general performance of various services. But their relevance to security is a different story. Imagine wading through your SIEM alerts to protect your company, only to get bombarded with repeated pings from these logs.

Is it important to add space to your database? Sure, give that SQL database some more space as the event alert calls for. While a full disk could point to a security problem if it’s caused by space-filling malware, the log itself doesn’t serve detection, investigation, or compliance needs. Knowing that, how pertinent are full-disk ops logs to store in SIEM? Probably not a high priority, especially if you’re already using dedicated service availability monitoring tools that give you this information firsthand. You could argue for centralizing all the data in one SIEM platform. The question then becomes: is your SIEM simply a log repository? If it’s meant to be a security-focused platform targeting threats, then feeding it data that other tools can handle may not be the best choice.

With all the excess data floating around, what are the industry's common practices to address this? You may be surprised.

If you had to put away some of your belongings, you might take them to a storage company that would let you store your items at a set price. But let's suppose that the storage company then insists you store everything you own (furniture, refrigerator, cars) in their facilities instead of allowing you to keep it at home. Worse yet, anything you buy moving forward must also be stored with them. Seems a little excessive and maybe a bit silly, but this happens daily with SIEM vendors. The market approach to SIEM is to collect every log possible and ship it all to the vendor. Why? Because if they charge for data ingested, then the more you send, the more they can charge. It’s no surprise that vendors encourage this and refrain from dissecting what really should be collected and what shouldn’t be. It doesn’t make business sense for them to do so, and it’s one of the reasons small and mid-sized businesses (SMBs) have been priced out of the SIEM market for years.

Tuning is a common practice across SIEM vendors today. It’s all about altering detections—by deciding what stays and what goes—to reduce false positives and improve accuracy. This normally occurs right after deployment and can take anywhere from two weeks to several months. In the end, data is boiled down, analyzed, and stored or made available for search queries. But here’s the thing—this approach overlooks the idea of filtering data before it’s collected, and there’s one basic reason: cost. Big data companies need big data costs to thrive. It’s no surprise that tuning is done after the consumer has incurred the expenses.

Is there a better way to avoid passing costs to you while still adhering to compliance requirements and security best practices? Of course.

With so many ways for log data to pile up in SIEM, we set out to build a platform tailored to address excessive log data and its associated pricing. The result is Huntress Managed SIEM.

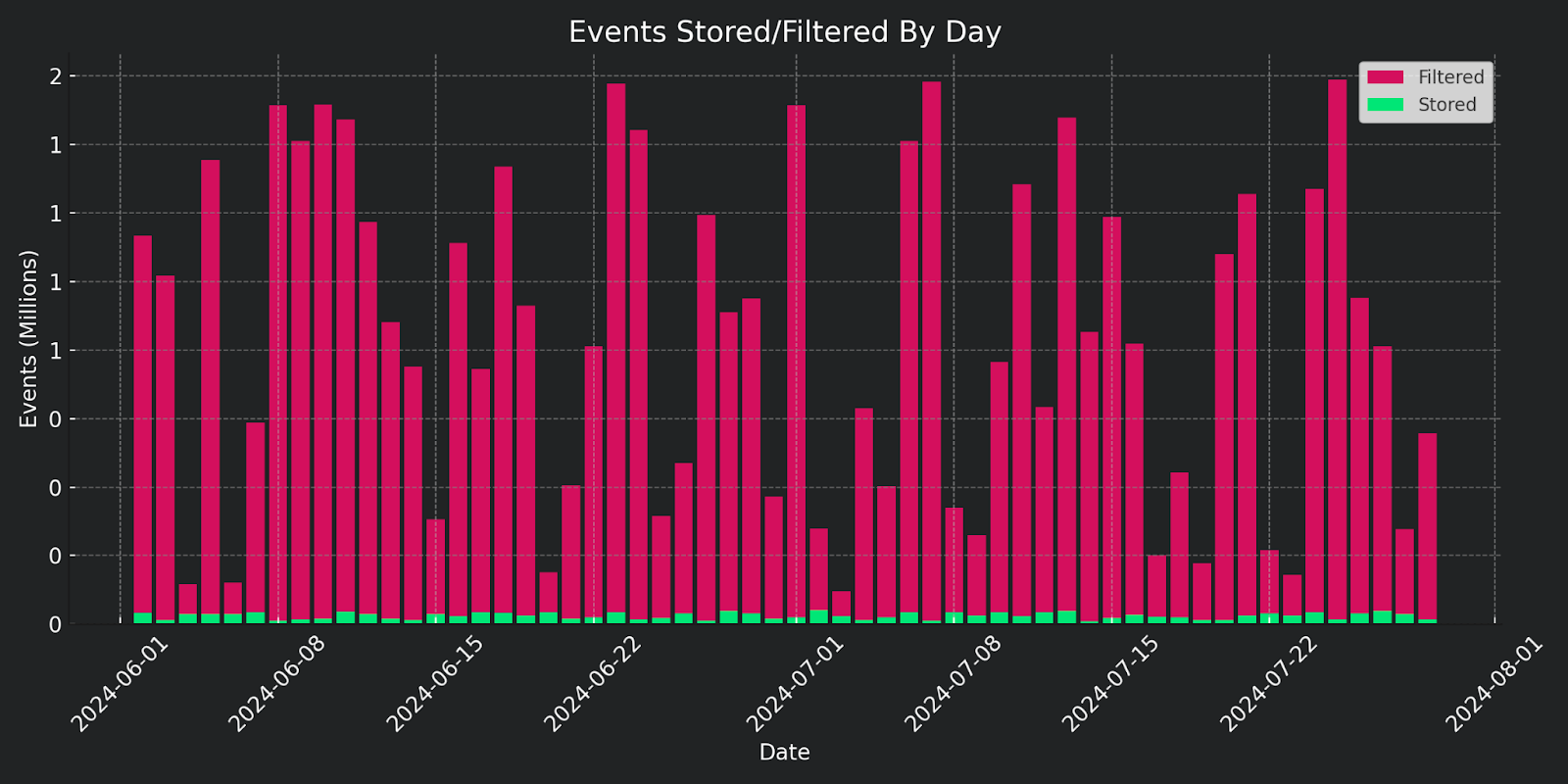

Remember the log-to-alert ratio funnel we talked about earlier? Our Smart Filtering starts slimming things down at the top of the funnel, cutting the noise before data moves to hot storage. The first layer of filtering reduces the log data coming in, but this doesn’t mean we drop data arbitrarily and call it a day. We’ve done our homework, analyzing the data we’ve collected and reviewing the event types generating the most noise. In our Early Adopter version of Huntress Managed SIEM, we filtered out over 20 Windows event types.

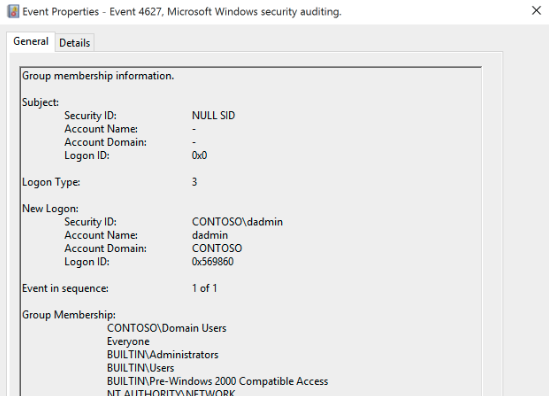

For example, let's look at Windows Event ID 4627: Group Membership Information.

While this event shows which groups a user belongs to, logging and storing it in SIEM only adds excess data. This might matter to the network engineering team, but the overall value to security is minimal. If there’s an escalation of privileges, it would be tracked through the actual event itself rather than through a log event like this one. And since the endpoint detection and response (EDR) agent tracks the primary privilege escalation events, storing a noisy log like Event ID 4627 in the SIEM platform just adds to the bloat on log storage and, of course, cranks up the cost.
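To make the first layer concrete, here is a minimal Python sketch of dropping noisy event types before they reach storage. It is an illustration only, not Huntress's implementation: the `event_id` field name, the `drop_noisy_events` helper, and the sample records are all assumptions, and the drop list contains only 4627 because that is the one ID named in this post.

```python
from typing import Iterable, Iterator

# Hypothetical drop list. 4627 (Group Membership Information) is the only
# event ID named in this post; a real list would be longer and data-driven.
NOISY_EVENT_IDS = frozenset({4627})


def drop_noisy_events(
    events: Iterable[dict], noisy_ids: frozenset = NOISY_EVENT_IDS
) -> Iterator[dict]:
    """Yield only the events whose ID is not on the drop list."""
    for event in events:
        if event.get("event_id") not in noisy_ids:
            yield event


# Made-up sample records; "event_id" is an assumed field name.
sample = [
    {"event_id": 4624, "message": "An account was successfully logged on"},
    {"event_id": 4627, "message": "Group membership information"},
    {"event_id": 4625, "message": "An account failed to log on"},
]

kept = list(drop_noisy_events(sample))
print([event["event_id"] for event in kept])
```

Because the check runs per event as the stream passes through, the dropped records never count toward ingested volume, which is the point of filtering at the top of the funnel.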

The second layer of filtering occurs before data passes on to storage, using our filtering rulesets. This second pass eliminates any noise not caught during the top-of-the-funnel phase. Using our Smart Filtering rulesets, we can eliminate non-security events, providing the SIEM with clean subsets of data for later threat detection.
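A second pass like this can be pictured as a list of named drop rules applied to whatever survived the first layer. The sketch below is a hedged illustration, not the actual Smart Filtering rulesets: the `DropRule` type, the rule names, and the `level`, `category`, and `message` fields are hypothetical, chosen to mirror the debug and IT Ops examples discussed earlier.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple

Event = dict


@dataclass(frozen=True)
class DropRule:
    """A named predicate; an event matching any rule is filtered out."""
    name: str
    matches: Callable[[Event], bool]


# Hypothetical rules echoing the debug-log and IT Ops examples in this post.
RULES = [
    DropRule("debug-level", lambda e: e.get("level") == "debug"),
    DropRule(
        "full-disk-ops",
        lambda e: e.get("category") == "it_ops"
        and "disk" in e.get("message", "").lower(),
    ),
]


def apply_ruleset(
    events: Iterable[Event], rules: List[DropRule]
) -> Tuple[List[Event], Dict[str, int]]:
    """Return the kept events plus a count of drops per rule name."""
    kept: List[Event] = []
    dropped: Dict[str, int] = {}
    for event in events:
        # The first matching rule claims the event; no match means keep it.
        rule = next((r for r in rules if r.matches(event)), None)
        if rule is None:
            kept.append(event)
        else:
            dropped[rule.name] = dropped.get(rule.name, 0) + 1
    return kept, dropped


# Made-up sample records for illustration.
sample = [
    {"level": "info", "category": "security",
     "message": "Excessive password authentication failures"},
    {"level": "debug", "category": "network",
     "message": "VPN tunnel keepalive sent"},
    {"level": "warning", "category": "it_ops",
     "message": "Disk is full on volume C:"},
]

kept, dropped = apply_ruleset(sample, RULES)
print(len(kept), dropped)
```

Counting drops per rule is a design choice in this sketch: it leaves a record of what each rule removed, which helps when deciding whether a rule is too aggressive.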

Tuning still comes into play with Managed SIEM, but our tuning process happens as close to the data source as possible. By cutting out excessive noise at the source, we can adjust for an influx of logs that would be informational at best. Tuning before collection keeps things smooth, so we’re not dealing with excess false positives after the fact.

For too long, SIEM has been out of reach for most, being too costly, too noisy, or too complex to manage. Huntress Managed SIEM changes all that by reducing the price of entry, leveraging Smart Filtering, and providing a fully managed platform. External stakeholders, whether compliance auditors or cyber insurance providers, now require SMBs to have SIEM functionality in place. Huntress wants to help you meet these requirements head-on.
